Artificial intelligence (AI) is transforming business communications, offering unprecedented opportunities for efficiency and personalization. However, this technological advancement also brings new security challenges, particularly in the form of AI-generated fraud. As organizations increasingly rely on AI-powered tools, it’s crucial to understand and mitigate these emerging risks.

AI-generated fraud poses a significant threat to businesses across industries. According to a recent survey by RingCentral, 72% of respondents believe their company could be targeted by AI-generated voice or video fraud in the next year. This statistic underscores how urgently organizations need robust strategies to protect themselves against these sophisticated attacks.

One of the primary concerns is the potential for AI to create highly convincing deepfakes: manipulated audio or video content that can be used to impersonate individuals or spread misinformation. Deepfakes can be used in a range of fraudulent activities, from social engineering attacks to financial scams. For instance, criminals could use AI-generated voice recordings to trick employees into divulging sensitive information or authorizing fraudulent transactions.

Furthermore, AI-powered chatbots and virtual assistants, while beneficial for customer service, can also be exploited by bad actors. Malicious entities might use advanced language models to create convincing phishing messages or engage in automated social engineering attacks at scale.

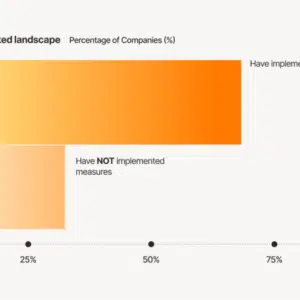

Interestingly, while the perceived risk of AI-generated fraud is high, the survey revealed that over 86% of respondents feel confident in their ability to distinguish between real and AI-generated voice or video content. This confidence, however, may be misplaced, especially as AI technology continues to advance rapidly.

Smaller businesses appear to be particularly vulnerable to AI-generated fraud risks. The survey found that respondents working in companies with 0-20 employees were the least confident in their ability to detect AI fraud, with only 62.75% expressing confidence, compared with over 86% of respondents overall. This disparity highlights the need for targeted support and education, especially for smaller organizations that may lack the resources or expertise to implement sophisticated fraud detection measures.

To address these challenges, businesses must adopt a proactive approach to AI security. This includes implementing advanced detection technologies, developing comprehensive security protocols, and providing ongoing training to employees. It’s essential to stay informed about the latest AI fraud techniques and continuously update security measures to stay ahead of potential threats.

As AI continues to evolve, so too will the methods used by fraudsters. Organizations must remain vigilant and adaptable in their approach to security. By understanding the risks associated with AI-generated fraud and taking proactive steps to mitigate them, businesses can harness the benefits of AI while safeguarding their operations and maintaining trust with their customers and partners.

What steps is your organization taking to protect against AI-generated fraud? Are you confident in your ability to detect and prevent these sophisticated attacks?

Explore the full report, The State of AI in Business Communications, today.

Originally published Feb 04, 2025, updated Feb 07, 2025
